For international tax law, it is of central importance how to locate, value and control the increasingly digital cross-border supply and service relationships within a multinational enterprise (MNE). This matters primarily from a tax authority's perspective. Nevertheless, digitalization significantly affects not only highly digitalized businesses but the economy as a whole, as the overall intent is to boost the MNE's efficiency and transparency and to optimize the performance of its functions, regardless of whether it is the production, marketing or research & development function.

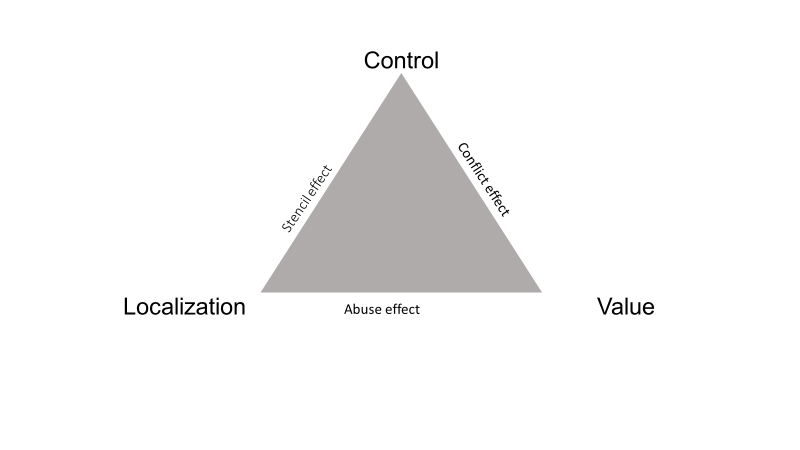

Still, particularly where “data” serves as the tax connecting factor, locating, valuing and controlling those functions that are primarily driven by data is a tricky task. It seems impossible to meet the essential criteria of clear localisation, value-added-oriented distribution and proper control simultaneously: a trilemma.

But there might be a solution: digital documentation tools, e.g. process mining (PM), could describe the data-driven functions. Application programming interfaces (APIs), used as a kind of bits-and-bytes meter, could count the data. Finally, PM and APIs could interact with blockchain (BC) approaches to verify the processes. Together, they might handle the mission impossible of the trilemma.

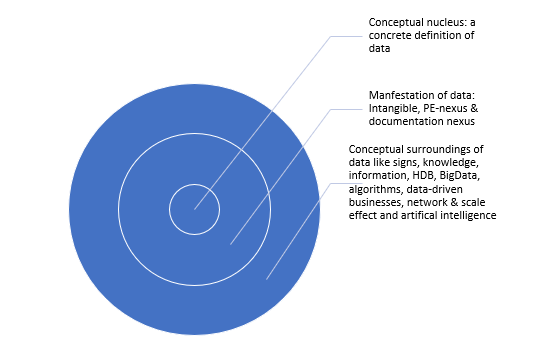
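To make the interplay of metering and verification more tangible, the following is a minimal Python sketch, not the authors' implementation. It assumes a hypothetical `MeteredLedger` that counts the bytes of each payload passing through an API per MNE function and links the records with SHA-256 hashes, as a simplified stand-in for a blockchain. All names (`MeteredLedger`, `record`, `bytes_by_function`, `verify`) and the sample functions and jurisdictions are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(entry: dict) -> str:
    # Deterministic SHA-256 over the JSON form of a record (keys sorted).
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class MeteredLedger:
    """Hypothetical meter: counts payload bytes per function and hash-chains the records."""
    records: list = field(default_factory=list)

    def record(self, function: str, jurisdiction: str, payload: bytes) -> dict:
        # Each record stores the hash of its predecessor, so later edits are detectable.
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        entry = {
            "function": function,          # assumed label, e.g. "marketing"
            "jurisdiction": jurisdiction,  # assumed label, e.g. "DE"
            "bytes": len(payload),         # the "bits & bytes meter" reading
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)
        self.records.append(entry)
        return entry

    def bytes_by_function(self) -> dict:
        # Aggregate the metered volume per function.
        totals = {}
        for r in self.records:
            totals[r["function"]] = totals.get(r["function"], 0) + r["bytes"]
        return totals

    def verify(self) -> bool:
        # Recompute every hash and check the chain links.
        prev_hash = "0" * 64
        for r in self.records:
            body = {k: v for k, v in r.items() if k != "hash"}
            if r["prev_hash"] != prev_hash or _digest(body) != r["hash"]:
                return False
            prev_hash = r["hash"]
        return True


ledger = MeteredLedger()
ledger.record("marketing", "DE", b"customer-base-export")
ledger.record("production", "FR", b"machine-performance-log")
print(ledger.bytes_by_function())
print(ledger.verify())
```

Note that such a byte count only measures volume. It says nothing about the value of the data, which is exactly the valuation problem discussed in this article.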

Part 1: Because these documentation techniques depend on a definition of what data is in the context of international taxation, we address this ground barrier in the first step with a proper definition of data. In the second step, we discuss the trilemma of data-driven taxation approaches and demonstrate its negative effects in the form of the conflict, stencil, and abuse effects.

In Part 2 of the article, we want to show in a third step how a documentation system might be integrated into the transfer pricing system to solve this trilemma, and give an outlook on the one-million-dollar question of how to tax data properly in times of digitalization.

First step & ground barrier: a proper definition of data

Before we can even think about documenting data to solve this trilemma, we must start at the beginning: with a common understanding of the term “data”.

There is no doubt that data can be broadly considered as “signs”, “information” or “knowledge”. But this rather semiotic approach does not define the conceptual nucleus of data adequately, as it is too abstract for transfer pricing documentation. From a computer science perspective, data may be captured more accurately. But in the context of international taxation, data is unfortunately usually described by its open conceptual surroundings, like “big data”, “network effect” and “highly digitalized businesses”, rather than by a concrete definition.

Nevertheless, although there might be no concrete definition, data is primarily related to persons or companies and their products. Person-related data, e.g. in the form of the customer base, plays a major role for the MNE's marketing function, just as product-related data, e.g. in the form of performance data, does for the production or R&D function. In the context of international taxation, such data appears in three main manifestations: as an intangible, as a nexus for a permanent establishment (PE), or as a nexus for documentation approaches.

Data as an intangible

The first manifestation – data as an intangible – is already accepted worldwide, e.g. by the OECD, although it is still unclear how to adequately assess the value of data.[1] Because the market seems to be imperfect, or at least because we do not know enough about the value of data between parties, there are different approaches to tackle this problem. None of them will answer the one-million-dollar question of how much data is worth. None of them will measure the value of data exactly. All of them are only auxiliary tools, not magical algorithms that calculate a final number. Current approaches include game-theory approaches or the rather formulaic documentation approach, which we pursue by using digital tools to make digital services comparable, as shown further below.

Data as a PE nexus

The second manifestation – data as a PE nexus – is a rather new question and has been under discussion as part of the challenge of undertaxed highly digitalized businesses.[2] The underlying problem is how to attribute an MNE's profits between states if, in times of increasing digitalization, a physical presence in the market state is no longer needed. In this case, data is considered an anchor point for broadening the definition of a (digital) PE.

Data as a documentation nexus

The third manifestation – data as a documentation nexus – also considers data as an anchor point.[3] But in contrast to the PE-nexus debate, data is only used as a nexus for documentation approaches like the ones the MNE's controlling department has been dealing with for decades in identifying and controlling the MNE's supply chain. In this case, data could be used to show transparently the functions and risk profile of an MNE and its global value chain ecosystem, by using tools like process mining and blockchain approaches in combination with application programming interfaces (more on this later, in the second step).

Nevertheless, the discussion of whether and how to tax data has just begun and faces significant challenges, because the question asked at the beginning remains: how do we measure and therefore value data, how can we locate it, and, maybe more importantly, how can it be controlled? This trilemma is what we want to show you in the second step, by thinking about how to integrate digital documentation tools into the transfer pricing documentation.

Second step: The trilemma of data-driven taxation approaches and its effects

In the first step, we discussed the ground barrier for taxing data in the context of international taxation: a proper definition of data. In the second step, we want to show you which negative effects result from this trilemma.

This form of trilemma is a problem that all manifestations of data in the context of international taxation face. “Data as an intangible” cannot be measured and, above all, controlled without adequately documenting it. The same applies to the “data as a PE nexus” question: without knowing the value of data, profit allocation is hard to imagine, even if it is possible to allocate the data-driven functions unambiguously to the respective jurisdiction and (!) to control them properly without giving birth to a new form of digital BEPS through data. And even if you want to use data as a documentation nexus, you rely heavily on adequate localisation and an easily accessible control mechanism.

This trilemma is characterized by three effects — the conflict effect, the abuse effect, and the stencil effect — which we want to show you in the following.

The conflict effect

Without clear localization, and therefore without an undisputed allocation of taxation rights, even proper control combined with adequately determined value contributions must result in a clash of interests among the tax authorities involved.

Just imagine the situation in which you (and everyone else) know the (more or less) exact, or at least undisputed, value of this data-driven function. Additionally, you actually understand it and are therefore able to control it. But you (as a tax authority) simply cannot say whether the function's origin falls within your nation's right to tax or not. And now think of all the other tax authorities around the globe who are in the same troubled position. How many arbitration bodies will be necessary to solve this conflict? Probably enough to make way for another Big4.

The abuse effect

Without proper control, even proper localization and value-oriented attribution must leave the MNE involved with significant scope for (abusive) structuring.

Now imagine you are in the following position: you are able to properly value and exactly locate the data-driven functions. You, e.g. as a tax authority, know that exactly those functions are something you have to consider. But for some reason, such as a lack of transparency in the documentation of the MNE's functions and risk profile or simply a lack of administrative skills in your own IT, you cannot control them. Not at all. From the MNE's perspective, on the other hand, this might be a great reason to adjust the transfer price to its own advantage. So a new form of BEPS has arisen, with data as the new unpredictable super intangible born to “reform” the arm's length principle, and the OECD gets another decade for proposals on taxing the digitalized economy.

The stencil effect

Without adequately determining the respective value contributions, even proper control combined with localization is not enough for a value-oriented allocation of profits and must result in a transfer price that is determined too imprecisely.

In this case, you might have no trouble actually reproducing and controlling the MNE's data-driven functions. Additionally, you might even be in a position to locate the whole thing and spare yourself and all the other parties involved an arbitration procedure. But without giving the function a name, or rather a value, transfer pricing is a little bit tricky, because all you can do is determine the value in stereotypes (or stencils), far from the arm's length principle.
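The three effects can be read as a simple mapping from the missing criterion to its consequence. The following Python sketch is only an illustration under our own simplifying assumptions: the three criteria are treated as yes/no flags, and the names `Effect` and `trilemma_effects` are hypothetical. The article itself describes only the cases where exactly one criterion is missing, so returning several effects at once is an extrapolation.

```python
from enum import Enum


class Effect(Enum):
    CONFLICT = "conflict effect"  # no clear localization
    ABUSE = "abuse effect"        # no proper control
    STENCIL = "stencil effect"    # no adequate valuation


def trilemma_effects(located: bool, valued: bool, controlled: bool) -> list:
    """Return the effects triggered by whichever criteria are not met."""
    effects = []
    if not located:
        # Taxing rights are disputed between the tax authorities involved.
        effects.append(Effect.CONFLICT)
    if not controlled:
        # The MNE gains scope to adjust the transfer price to its advantage.
        effects.append(Effect.ABUSE)
    if not valued:
        # The transfer price can only be set in stereotypes.
        effects.append(Effect.STENCIL)
    return effects


# A function that is valued and controlled, but not located:
print(trilemma_effects(located=False, valued=True, controlled=True))
```

Only when all three flags are true does the list stay empty, which is the situation the trilemma says is so hard to reach.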

Interim Conclusion

So, we know what data is in the context of international taxation, and we know how hard it is to locate, value and control data-driven functions of the increasingly digitalized economy. But what are the possible solutions? We think proper taxation needs proper documentation, but that is something we want to elaborate on in Part 2.

******************************************************

* The team to tax data:

Jan Winterhalter is a tax lawyer & PhD candidate in international taxation at the Institute for Tax Law under Professor Dr. Marc Desens and a research fellow & scholar of the Heinrich Böll Foundation. His recent research at the crossroads of economics, philosophy & computer science revolves around current reforms of the international tax system, with a focus on data-driven business models and the digital nexus. He is currently working on digital documentation systems using process mining and blockchain methods. For more information, see https://www.researchgate.net/profile/Jan_Winterhalter and http://www.cluster-transformation.org/en/start.

Andreas Niekler is a research assistant & computer science lecturer at the Institute of Computer Science at the University of Leipzig, who works, among other things, in the project group “Data Mining and Value Creation”. He studied Media Technology at HTWK Leipzig and the University of West Scotland. After two years working as a freelance programmer and lecturer at the Leipzig School of Media, he joined the NLP group at the University of Leipzig. During his doctorate, he used Bayesian models and clustering techniques to analyse how topics can be captured within texts. In his research at Leipzig University, he created and successfully published novel approaches, methodologies and interfaces to make large digital text collections accessible to content analysis research, see http://asv.informatik.uni-leipzig.de/en/staff/Andreas_Niekler.

Together, they established the idea of this trilemma (which has its origins in the article Winterhalter/Niekler, Digitax 2020, Vol.1 p.49-53), see https://processtax.github.io/.

Contact via jan.winterhalter@uni-leipzig.

[1] OECD (2015), Addressing the Tax Challenges of the Digital Economy, Action 1 – 2015 Final Report, OECD/G20 Base Erosion and Profit Shifting Project, OECD Publishing, Paris, pp.99-101.

[2] Ibid., p.102.

[3] Winterhalter / Niekler, Das Trilemma datenbasierter Besteuerungsansätze, Digitax 2020, Vol. 1, p.59-63.
